As the loyal readers among you may have noticed, there has been a lack of posts in the last week or so. Don’t worry, though: this trend will soon be halted, as there are many new and exciting posts written, planned and on the way.

This article is just to keep you in the loop and let you know what is going on.

Usually, I will endeavour to keep people up to date with changes to the blog via our social channels, specifically Facebook and Twitter. I feel the blog is a place for content, whilst news about the ‘frills’ (that is, design and functionality updates) belongs on social media.

Social Media Updates

Let me start by letting you know how our social side is currently evolving. A few months ago, Facebook stopped feed compatibility, meaning that if you liked us on Facebook, you no longer got updates regarding new posts. Now, however, I have linked Twitter to Facebook, so everything @tecbloggers tweets is also posted on our Facebook page.

UPDATE: We now tweet under the username @TechBloggers.

This means that you can now receive updates of new posts via your Facebook feed.

Some tweeters like to spam you with content every five minutes, and some Facebook users update their statuses practically all the time. I don’t believe in this, and only post or tweet an update if it is something you may want to know. Small site improvements, issues and interesting content are the sort of thing we use our social media channels for.

Occasionally, if I find something interesting, or someone brings something to my attention, that I think is worth sharing with the community but doesn’t warrant a post, it may get shared via social media. Don’t worry about getting spammed if you subscribe; we will only be posting stuff you probably want to know about.

I have also recently added a cover photo to our Facebook page, as it was looking a little bland. I didn’t have any great ideas, but I think it works for now 🙂 If you have any suggestions, by all means leave them in the comments below.

In future, I don’t plan on writing as many posts of this sort, as I feel it is better to keep you informed of updates via social media, as and when they occur.

If you don’t want to miss out on future update news, subscribe now!

In other news…

Top Writers

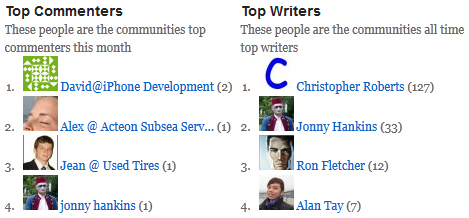

For a long time now we have had a top commenters widget at the bottom of every page. However, the observant among you may have noticed the appearance of a new widget: a top writers list.

I came across the plugin whilst searching for something else, and thought it was a good idea. This is a community blog, so if we highlight the top commenters, why should writers not be recognised too? Well, now they are 🙂

The top commenters list excludes administrators and resets every month; the top writers list doesn’t.

Design Updates

Technology Bloggers’ design is constantly being updated and tweaked. I believe that continuous improvement is important. Most of my time is spent writing and replying to comments; however, I do dedicate some time to improving other areas of the site.

One recent update is the removal of the social icons from the sidebar, and the addition of a new set of social buttons to the header. I felt that this area needed a bit more colour, and the buttons bring just that!

More Speed!

A few weeks ago I posted on our social channels:

“Just moved servers in order to speed up the blog 🙂

Do you notice a difference?”

We encountered a few problems, but they were soon sorted out, leading me to later post:

“A few hiccups later, Technology Bloggers is fully functioning and faster than ever!”

The blog’s response time was sometimes really quite slow (usually higher than 2000 milliseconds!). I moved the blog to a different server and the response time is now around a quarter of what it was, currently around the 550 ms mark.

That is one reason you may have noticed the blog loading faster. Another is the relentless effort I have been putting into slimming things down and reducing load times.

Google’s Page Speed tools have been very useful, enabling me to see where the site lags and what can be done to improve it. I think there may be an article on the way soon with more detail on Page Speed, and on how I have used, and am still using, the tools to speed up the blog. Watch this space.

Jonny

For a while now, Thursday here on the blog seems to have been Jonny’s day, with him posting a regular feature every Thursday for more than ten weeks.

Thursday is not officially dedicated to the writings of Mr Hankins; however, at the moment I feel it is good that the regular feature is on a fixed day, as it gives consistency. His articles are very popular, and it is a delight every Thursday to see what new and innovative topic he has chosen to cover.

Jonny has been busy travelling of late, meaning that last week he was unable to post. Don’t worry though, he already has an article written and lined up for us for tomorrow 🙂

Competition

Just a quick note about a competition I plan on launching next Monday. Technology Bloggers has teamed up with two other blogs, and will hopefully soon be launching a competition in which anyone bar the three prize donors can enter for a chance to win one of three $50 (USD) prizes, $150 in total!

UPDATE: This will now launch on Tuesday.

Until Next Time

That’s about it from me for now. Remember, if you want to keep up to date, be sure to subscribe to our social profiles, and stay tuned to the blog to see our exciting future unfold…